Introduction

Fake news is an umbrella term for unchecked news articles that may contain untrue or misrepresented facts with the intention of either influencing readers opinions or making revenue from advertising. In recent decades, with the rise of the internet and the ease of publishing and distributing content, the world has seen a rise in the amount of fake news circulating online. In this article, we’ll discuss more about fake news, why it must be distinguished from real news, and how we can do it at scale using Python.

The Importance of Detecting Fake News

While a trained journalist or a reader with enough exposure can tell the difference between fake and real news, most of the general population may not be equipped or have the time to cross-check news for legitimacy. Hence, social media platforms and other consumer apps try to analyze the content and label any news that could be potentially fake. This is helpful to the readers.

As for engineers and data analysts, it is important that we are able to work with quality data that does not contain fake news. Now that AI models such as LLMs are being widely deployed for various text analysis tasks including news analysis, fake news can severely skew our analysis or cause LLMs to give us inaccurate or undesirable outputs. For these kinds of use-cases, we are usually working with thousands or millions of news articles, and it is not feasible to manually go through all of them and filter out the fake news. Hence, writing a computer program that classifies news as fake or real comes in handy, as it can process hundreds of articles in a matter of seconds.

Python is a friendly yet powerful general-purpose language and is widely used for data analysis workflows. It is hugely popular in the community for machine-learning applications, which means there are libraries available for implementing machine learning algorithms and other routine tasks such as splitting the dataset into training and testing sets, evaluating model performance, etc. Hence, we’ll be using Python for this tutorial.

Choosing the Dataset

For building any classifier, we need to have a quality dataset. The dataset needs to have a good representation of all categories. In our case, the categroies would be ‘fake’ and ‘real’ news. Next, the sample size also needs to be big enough to avoid overfitting and to be split into training and testing datasets. It is a standard practice to train the model on one part of the data and evaluate it on a different part of the data or a different dataset altogether.

One reliable source of clean, trustworthy news data is our very own News API by NewsCatcher. We could use some real news articles from this API, along with an equally sized dataset of fake news. However, to keep things simple, for this tutorial we’ll be using a dataset from Kaggle uploaded by Emine Bozkus made specially for the purpose of training a fake news detection model. This dataset contains just over 20,000 articles each of fake and real news. Let’s see some example articles from this dataset:

Fake News Detection With Python: Setting Up the Environment

For setting up the environment, we’ll first download the datasets from the above link and have them in our working directory. We’ll name the CSV with real news as real.csv and the one with fake news as fake.csv. Next, we’ll install the following libraries, which we’ll use for the various steps involved in this tutorial.

- pandas: To load and process the CSV datasets

- nltk: Natural Language Tool Kit; for preprocessing the news article texts

- scikit_learn: To build and evaluate the fake news detection model

Data Preprocessing for Fake News Detection Using Python

First, let’s read the data in real.csv and fake.csv. We’ll use pandas for this:

import pandas as pd

# The CSVs contain 4 columns: title, text, subject, and date

# We'll only use the text for our analysis

real_news = pd.read_csv('real.csv', index_col=None)[['text']]

fake_news = pd.read_csv('fake.csv', index_col=None)[['text']]

# Let's combine these two into a single dataframe, with labels

all_news = pd.concat([

real_news.assign(label='real'),

fake_news.assign(label='fake'),

])Now that we have the working dataset ready, let’s proceed with the next steps.

Cleaning and Normalizing the Text

Next, we’ll preprocess the text content in our news articles so it’s easier for the model to analyze and classify. Essentially, we’ll be removing some features of the text that are not very helpful in classifying it as real or fake. We’ll first do some basic string processing to simplify the text. After that’s done, we’ll use the nltk library to do some routine natural language processing tasks on the text. Let’s see the code for this:

import nltk

import string

# Download some NLTK resources if not already done

nltk.download('stopwords')

nltk.download('wordnet')

nltk.download('punkt_tab')

# For string cleanup

char_translation = str.maketrans('', '', string.punctuation + string.digits)

# For NLTK operations

stop_words = set(nltk.corpus.stopwords.words('english'))

stemmer = nltk.stem.PorterStemmer()

def article_preprocessor(article_text):

# Basic String Cleanup

article_text = article_text.lower()

article_text = article_text.translate(char_translation)

# NLTK Operations

# Stem the Text Into a List of Words

words = nltk.tokenize.word_tokenize(article_text)

# Filter Out Stop Words

words = filter(lambda word: word not in stop_words, words)

# Stem The Words to Their Basic Form

words = map(lambda word: stemmer.stem(word), words)

# Return the Result

return ' '.join(words)

real_news['text'] = real_news['text'].apply(article_preprocessor)

fake_news['text'] = fake_news['text'].apply(article_preprocessor)

'''

Example:

Before Processing:

WASHINGTON (Reuters) - The head of a conservative Republican

faction in the U.S. Congress, who voted this month for a

huge expansion of the national debt to pay for tax cuts...

After Processing:

washington reuter head conserv republican faction us

congress vote month huge expans nation debt pay tax cut...

'''In the above code, we apply an article_preprocessor function to the whole dataset. Inside the function, we start by converting the text into lowercase and then remove the digits and punctuation. Our assumption is that these features would not help us tell apart real news from fake news, and hence they aren’t necessary for our model. We then use the nltk library to further simplify the text using NLP techniques. First, we tokenize the text into a list of words. Second, we filter this list by removing stop words. Stop words are the common words used in text, such as ‘the’, ‘in’, ‘as’, ‘for’, etc. These too may not be very relevant for our classification task. Finally, we stem the words to reduce them to their root form by removing affixes. For example, ‘intelligence’ and ‘intelligent’ would become ‘intellig’. This further reduces the number of unique word tokens in the dataset, making it easier for the algorithm to process.

Stemming is different from a similar process called lemmatization. The latter is also used in NLP to reduce words to their simpler forms, but the result of lemmatizing a word is always a dictionary word. While adding more processing steps makes the text simpler and the program run faster, it could also lead to a loss of data features that reduces the performance. We can always add or omit some of these steps and evaluate the model performance to see what works best.

Read more about stemming and lemmatization here.

Feature Engineering

After the previous steps, we have datasets that contain cleaned and normalized text. But the machine learning algorithms that we’ll be using do not understand strings of text; they understand only vector data. Therefore, we’ll be converting the text data into vectors, which can be used as inputs to train, test, and predict using machine learning algorithms.

For our algorithm, we’ll be converting each article text into a vector where each word token occurring in the article becomes a dimension and the token count becomes the corresponding magnitude. This is known as the bag-of-words approach and is one of the simplest approaches we could be using. The sklearn provides us a CountVectorizer class to achieve this. Let’s look at the code below:

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.model_selection import train_test_split

# Split Data Into Training and Testing Sets

x_train, x_test, y_train, y_test = train_test_split(

all_news['text'], all_news['label'],

test_size=0.2, random_state=50,

)

# Initialize Vectorizer and Vectorize Data

vectorizer = CountVectorizer()

x_train_vectorized = vectorizer.fit_transform(x_train)

x_test_vectorized = vectorizer.transform(x_test)In the above code, we first split the dataset into testing and training sets using the sklearn.model_selection.train_test_split function. We pass the text and label columns as the inputs and expected outputs, respectively. We also specified a test_size=0.2 , which means a random 20% of the data will be used for testing, while the rest will be used for training. To ensure that the randomly selected testing and training splits stay consistent between successive runs, we specify a random_state. This ensures a fair comparison if we are tweaking the preprocessing or any other steps involved in the algorithm and comparing the model performance between two runs.

After splitting the dataset, we obtain the training and testing inputs x_train and x_test and their corresponding expected outputs y_train and y_test. We vectorize the inputs using the CountVectorizer that we initialized earlier.

Note that we use fit_transform for the training data, while we only use transform for the test data. This is because the model, while being trained, is not supposed to know about the features of the test data, and hence it cannot consider that data for fitting.

While we’ve used the Count Vectorizer to demonstrate the simple bag-of-words approach, there are other methods that could be used instead. Some of these include Word2Vec, Doc2Vec, TF-IDF (Term Frequency-Inverse Document Frequency), and GloVe (Global Vectors for Word Representation). Similar to preprocessing, we can try different methods here too and check which one performs best.

Building and Training the Fake News Detection Model

From the previous step, we have a dataset containing vectorized news articles and corresponding labels (’real’ or ‘fake’). Using this, we’ll be building a predictive classifier model that can classify a given article as 'real’ or 'fake'. There are multiple classification algorithms such as K-Nearest Neighbors, Logistic Regression, and Naive Bayes. Ready implementations of these algorithms are provided by the sklearn library. For demonstration, let’s use the Naive Bayes algorithm. The code for building and training the model is below:

from sklearn.naive_bayes import MultinomialNB

# Initialize the Model

classifier = MultinomialNB()

# Train the Model

classifier.fit(x_train_vectorized, y_train)In the above code, we used the sklearn.naive_bayes.MultinomialNB class to initiate a classifier, and then we fitted the training data in the classifier. This completes the training, and the model is ready for use.

Read more about classification algorithms here.

Evaluating the Model

To evaluate the model we constructed in the previous step, we’ll be running it on the test inputs and comparing the predicted outputs with the actual outputs that we already know. We’ll be measuring the performance using the metrics accuracy, precision, recall score, and F1 score. These can be calculated using inbuilt methods of the sklearn library. Let’s see what these metrics meanAccuracy

- Accuracy:

- Proportion of correct predictions

- Calculated as: (true positives + true negatives) / (true positives + false_positives + true negatives + false negatives)

- How many news articles did we correctly classify as fake or real?

- Precision:

- Proportion of true positives to total predicted positives

- Calculated as: (true positives) / (true positives + false positives)

- How many of the news articles that we classified as fake are actually fake?

- Recall:

- Proportion of true data points identified as true.

- Calculated as: (true positives) / (true positives + false negatives)

- Of the total fake news articles, how many could we identify?

- F1 Score

- A score that combines precision and recall

- Calculated as: (2 x precision x recall) / (precision + recall)

- Balances both recall and precision while identifying fake news.

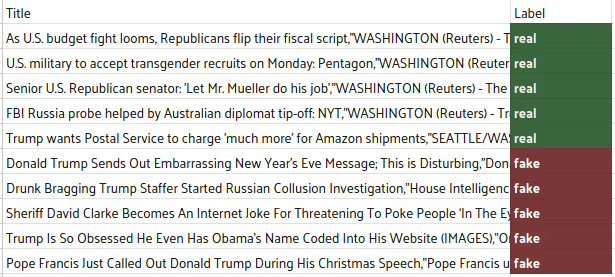

Note that the above definitions are generic, and ‘true’ refers to a news article that is truly fake, and not a ‘real’ news article. Some snippets of news titles and their expected classifications are shown below:

Example Of Real and Fake Articles From The Dataset

Let’s see how to test the model and compute the performance metrics:

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

# Evaluate the Model

y_pred = classifier.predict(x_test_vectorized)

# Calculate Performance Metrics

# By Comapring y_test and y_pred

accuracy = accuracy_score(y_test, y_pred)

print(f'Accuracy: {round(accuracy,2)}')

precision = precision_score(y_test, y_pred, average='binary', pos_label='fake')

print(f'Precision: {round(precision, 2)}')

recall = recall_score(y_test, y_pred, average='binary', pos_label='fake')

print(f'Recall: {round(recall, 2)}')

f1 = f1_score(y_test, y_pred, average='binary', pos_label='fake')

print(f'F1 Score: {round(f1, 2)}')

'''

OUTPUT:

Accuracy: 0.95

Precision: 0.96

Recall: 0.95

F1 Score: 0.95

'''Accuracy is a naive, absolute metric that is not frequently used to evaluate models. We can select one of the other 3 metrics to optimize our model, depending on our use case. For example, we can use precision for news analysis or monitoring tasks. Here, we need our working dataset to be as large as possible. So we wouldn’t mind if a few fake news articles make it through our filter but we do not want to be discarding real news articles because they were wrongly classified as fake. Recall, on the other hand, maybe more suitable for consumer apps. Here our goal could be to aggresively minimize the fake news being shown to the users, even if that means we discard some legitimate news articles that are wrongly labeled as fake. As we can see, there is a trade-off between both the approaches. F1 score is a sort of a middle-ground, a hybrid of precision and recall. It can be used suitably.

Conclusion

In this article, we looked at how we can detect fake news using Python. Essentially, we downloaded a fake news dataset from Kaggle and used it to train a machine learning model that classifies a given a news article as fake or real. We evaluated it on a portion of the original of the dataset that we had set aside for testing, and evaluated the model performance using the metrics accuracy, precision, recall and F1 score.

Depending on our case, we can use one of these scores to further optimize the model. To improve the performance, we can:

- Add/Remove/Modify Text Preprocessing Steps: Preprocessing simplifies the data and reduces the computation cost but it might lead to loss of useful features in the data.

- Use a Different Vectorizer: The core model works on the vector we construct with the string data. A bag of words approach like we used here is simple for compute but leads to some data loss in terms of word sequence in the text and so on. Trying out different vectorizers will help us figure out the optimal one.

- Use a Different Classifier: Different classifiers are suited for different kinds of classification problems. Ours is a text classification problem and we can experiment with other classifiers to see if we get better results.

Fake news being circulated online can be detrimental to individuals, organizations and entire societies, especially when the world is grappling with pandemics and wars. Detecting fake news in an automated, scalable way using Python is a critical step in news data analysis workflows, building consumer apps, and any other task that involves working with large amounts of news data.